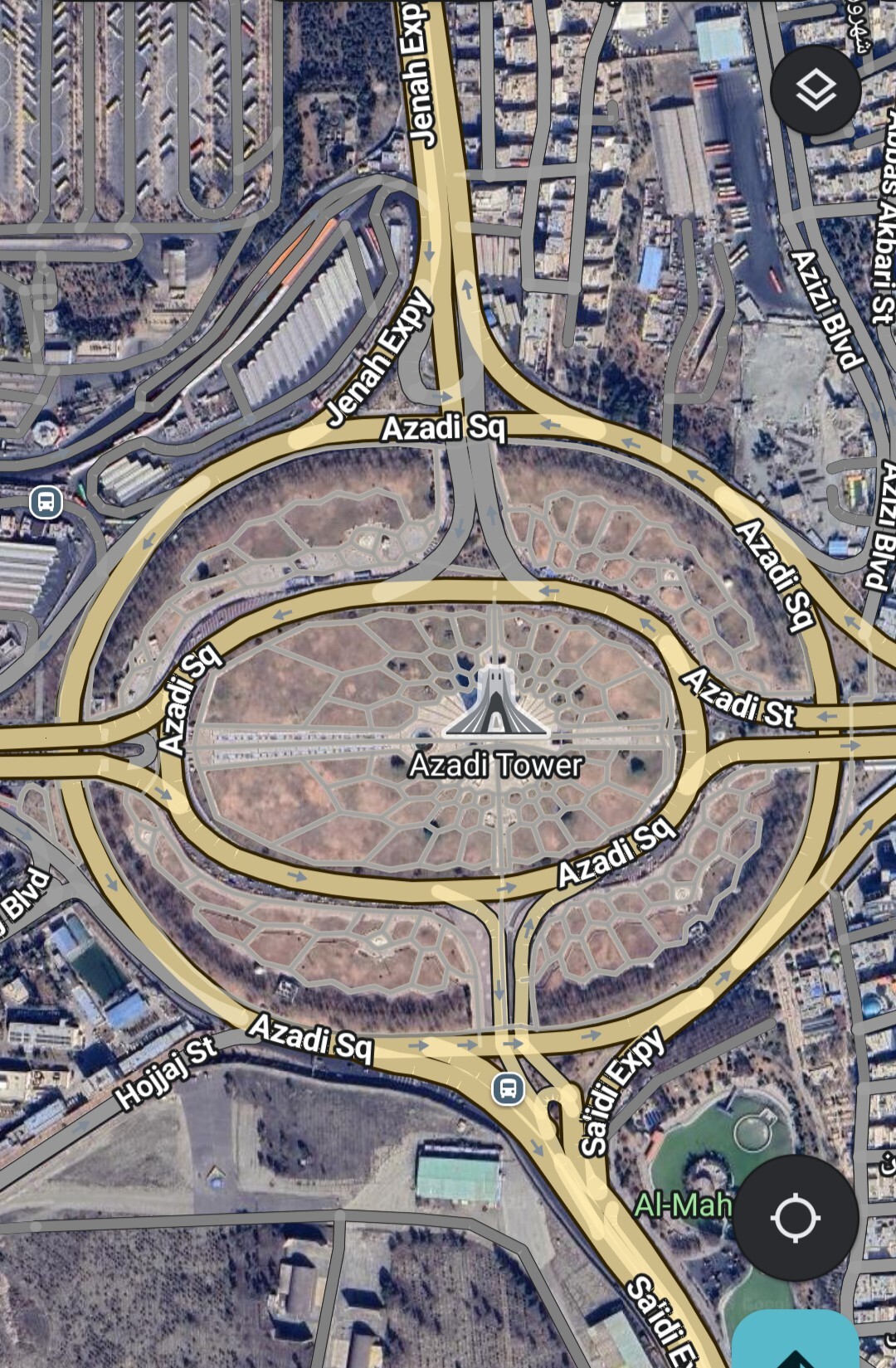

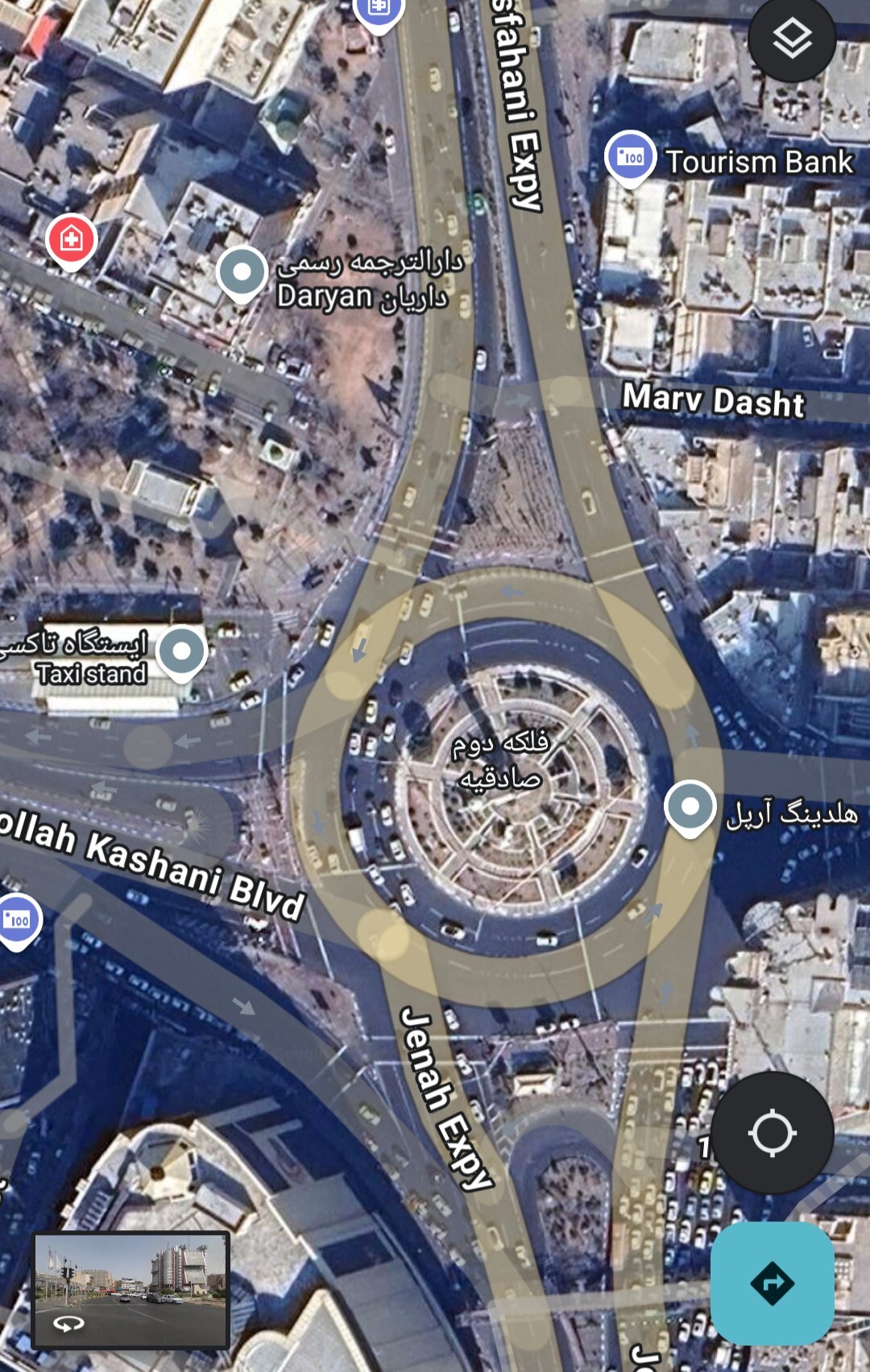

None of you guys would survive Iran

This is unironically a technique for catching LLM errors and also for speeding up generation.

For example, these kinds of setups are used in speculative decoding and mixture-of-experts architectures.
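For the speculative decoding part, here's a minimal greedy sketch, assuming two toy "models" that are just Python functions (`target`, `draft`, `TEXT` are all made up for illustration). A cheap draft model guesses a few tokens ahead, the big model checks them, and everything after the first wrong guess gets thrown away. The output is always identical to what the big model would produce alone.

```python
from typing import Callable, List

# A "model" here is just a function: token prefix -> its greedy next token.
NextToken = Callable[[List[str]], str]


def greedy_decode(model: NextToken, prompt: List[str], max_new: int) -> List[str]:
    """Plain autoregressive decoding: one model call per new token."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(model(out))
    return out[len(prompt):]


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[str], k: int, max_new: int) -> List[str]:
    """Greedy speculative decoding: the cheap draft guesses k tokens ahead,
    the big target model checks them and keeps the agreeing prefix."""
    out = list(prompt)
    while len(out) - len(prompt) < max_new:
        # 1. Draft proposes k tokens on its own.
        proposal: List[str] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. Target verifies position by position. In a real system this is
        #    one batched forward pass over all k positions; that's the speedup.
        for guess in proposal:
            correct = target(out)
            out.append(correct)  # the target's token is always what gets kept
            if correct != guess or len(out) - len(prompt) >= max_new:
                break  # first wrong guess: discard the rest of the draft
    return out[len(prompt):]


TEXT = list("the cat sat on the mat")

def target(prefix: List[str]) -> str:  # toy "big" model: always right
    return TEXT[len(prefix)] if len(prefix) < len(TEXT) else "<eos>"

def draft(prefix: List[str]) -> str:  # toy "small" model: wrong every 5th position
    return "?" if len(prefix) % 5 == 0 else target(prefix)

prompt = TEXT[:4]
fast = speculative_decode(target, draft, prompt, k=4, max_new=10)
slow = greedy_decode(target, prompt, max_new=10)
print("".join(fast), fast == slow)  # cat sat on True
```

Real implementations also handle sampling (accept/reject against the two probability distributions) instead of this greedy match-or-reject rule.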

Fuck the kids. Their piece of shit parents can pull their fucking weight if they have a problem

Someone who is very tall can give the others a boost when they're trying to jump a wall to rob a place lmao

Obscure slang I guess

A question for big car drivers

How the fuck do you drive?

I have a slightly longer and wider than usual SEDAN and I struggle in the city. I can’t imagine steering a massive hunk of shit

Brings my short ass comfort that we are better at one fucking thing than bandit ladders /s

Not making these famous logical errors

For example, how many Rs are in Strawberry? Or shit like that

(That one is a bad example, though, because token-based models will fundamentally make such mistakes. A newer technique that lets LLMs process byte-level information fixes it, however.)
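A quick illustration of why tokens make the strawberry thing hard. At the byte level every letter is right there; after tokenization the model only sees chunk IDs. The token split and the IDs below are hypothetical, real tokenizers split differently.

```python
word = "strawberry"

# Letter/byte-level view: every character is there, so counting is trivial.
r_count = word.count("r")
byte_values = list(word.encode("utf-8"))  # one integer per byte
print(r_count, len(byte_values))  # 3 10

# Hypothetical subword split (real tokenizers split differently). The model
# only ever receives the IDs, not the letters hidden inside each chunk.
hypothetical_tokens = ["str", "aw", "berry"]
toy_vocab = {tok: i for i, tok in enumerate(hypothetical_tokens)}  # made-up IDs
token_ids = [toy_vocab[t] for t in hypothetical_tokens]
print(token_ids)  # [0, 1, 2]: three opaque numbers, no visible "r"s
assert "".join(hypothetical_tokens) == word  # same text, different units
```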

The most recent Qwen model supposedly works really well for cases like that, but I haven't tested this one myself; I'm going off what some dude on Reddit tested

This is the most “insufferable redditor” stereotype shit possible, and to think we’re not even on Reddit

Small-scale models like Mistral Small or the Qwen series are achieving SOTA performance with fewer than 50 billion parameters. QwQ32 could already rival shitGPT with 32 billion parameters, and the new Qwen3 and Gemma (from Google) are almost black magic.

Gemma 4B is more comprehensible than GPT4o. The performance race is fucking insane.

ClosedAI is 90% hype. Their models are benchmark princesses, but they need huuuuuuge active parameter sizes to effectively reach their numbers.
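For anyone wondering what "active parameters" means: in a mixture-of-experts layer a router scores every expert, but only the top-k actually run for a given token, so only their weights count as active. A toy sketch below; the experts, gate values and the 1,000-parameter expert size are all made up, and real MoE layers add things like load balancing that are left out here.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, experts: List[Expert], gate: List[Vector],
                top_k: int = 2) -> Tuple[Vector, List[int]]:
    """Route x to the top_k experts by gate score and mix their outputs."""
    # One gate score per expert: dot product of that expert's gate row with x.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):  # only these experts actually run
        out = [o + w * y for o, y in zip(out, experts[i](x))]
    return out, chosen


# Toy setup: 4 experts that just scale the input by 1, 2, 3, 4.
experts: List[Expert] = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate = [[0.1], [0.5], [0.0], [0.9]]
y, used = moe_forward([1.0], experts, gate, top_k=2)
print(used)  # [3, 1]

params_per_expert = 1_000  # hypothetical size
print(f"active: {len(used) * params_per_expert} of {len(experts) * params_per_expert}")
```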

Everything said in this post is independently verifiable by taking 5 minutes to search shit up, and yet you couldn’t even bother to do that.

You can experiment on your own GPU by running the tests using a variety of models of different generations (LLAMA 2 class 7B, LLAMA 3 class 7B, Gemma, Granite, Qwen, etc…)
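A bare-bones comparison loop could look something like this. It's a sketch, assuming each model is wrapped as a plain `prompt -> answer` function; the stub models, the questions and the exact-match scoring are placeholders, and hooking it up to your actual local inference setup isn't shown.

```python
from typing import Callable, Dict, List, Tuple

# A model is anything that takes a prompt string and returns a completion.
# Wire these to whatever local inference setup you use (not shown here).
Model = Callable[[str], str]


def exact_match_accuracy(model: Model, cases: List[Tuple[str, str]]) -> float:
    """Fraction of cases where the normalized answer matches exactly."""
    hits = sum(model(q).strip().lower() == a.strip().lower() for q, a in cases)
    return hits / len(cases)


def compare_models(models: Dict[str, Model],
                   cases: List[Tuple[str, str]]) -> Dict[str, float]:
    return {name: exact_match_accuracy(m, cases) for name, m in models.items()}


cases = [
    ("How many times does the letter r appear in 'strawberry'? Answer with a number.", "3"),
    ("What is 12 * 12? Answer with a number.", "144"),
]

# Stand-in "models" so the sketch runs on its own.
canned = {cases[0][0]: "3", cases[1][0]: "144"}
models = {
    "stub-good": lambda q: canned[q],
    "stub-bad": lambda q: "2",
}
print(compare_models(models, cases))  # {'stub-good': 1.0, 'stub-bad': 0.0}
```

Exact match is the crudest possible scorer; papers usually describe something more forgiving, so copy their methodology if you want comparable numbers.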

Even the lowest-end desktop hardware can run at least 4B models. The only real difficulty is scripting the test system, but the papers are usually helpful in describing their test methodology.
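Back-of-the-envelope math for the weights of a 4B model, assuming the usual 16/8/4-bit formats. This counts weights only; KV cache, activations and runtime overhead push real usage higher.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).
    Ignores KV cache, activations and runtime overhead, so real usage is higher."""
    return n_params * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4):
    print(f"4B params at {bits}-bit: ~{weight_memory_gb(4e9, bits):.1f} GB")
# 4B params at 16-bit: ~8.0 GB
# 4B params at 8-bit: ~4.0 GB
# 4B params at 4-bit: ~2.0 GB
```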

and that you, yourself, can stand as a sole beacon against the ever-growing pile of evidence and studies that both point toward and outright prove your claims to be full of shit?

Model quality has been going up steadily and hallucination rates have been going down, and multishot prompts and RAG reduce hallucination rates further. These are proven scientific facts, what the fuck are you on about? Open Hugging Face RIGHT NOW, go to the papers section, FUCKING READ.

I’ve spent 6+ years of my life in compsci academia to come here and be lectured by McDonald in his fucking basement. What has my life become?

My honest goal is to educate people, which on Lemmy is always met with hate. People love to hate, parroting the same old nonsense that someone else taught them.

If you insist on ignorance then be ignorant in peace; don’t make such misguided attempts at sneering

There are things LLMs suck at. And there are things that you wrongly believe as part of this bullshit Twitter civil war.

You need to run the model yourself and heavily tune the inference, which is why you haven’t heard of it: most people think using shitGPT is all there is to LLMs. How many people even have the hardware to do so anyway?

I run my own local models with my own inference, which really helps. There are online communities you can join (won’t link bcz Reddit) where you can learn how to do it too, no need to take my word for it

O3 is trash, same with ClosedAI

I’ve had the most success with Dolphin3-Mistral 24B (open model finetuned on open data) and the Qwen series

Also lower model temperature if you’re getting hallucinations
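What temperature actually does, as a small sketch: logits get divided by T before the softmax, so a lower T piles more probability onto the top-ranked token and makes low-probability picks rarer. The logits below are made up. Note it only reduces sampling randomness; if the top token itself is wrong, lowering T won't fix that.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Divide logits by T, then softmax. T < 1 sharpens, T > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (T -> 0 approaches greedy decoding)")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (1.0, 0.5, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```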

For some reason everyone is still living in 2023 when AI is remotely mentioned. There is a LOT you can criticize LLMs for; some bullshit you regurgitate without actually understanding it isn’t one of them

You also don’t need 10x the resources. Where tf did you even hallucinate that from?

Hallucinations become almost a non-issue when working with newer models, custom inference, multishot prompting and RAG
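Roughly what multishot prompting plus RAG looks like when you assemble the prompt yourself. This is a toy sketch: the word-overlap retriever stands in for the embedding search real setups usually use, and the documents, example Q/A pair and instruction wording are all made up.

```python
import re
from typing import List, Set, Tuple


def words(text: str) -> Set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, docs: List[str], top_k: int = 2) -> List[str]:
    """Toy retriever: rank documents by how many words they share with the query.
    Real RAG setups usually use embedding similarity instead."""
    q = words(query)
    return sorted(docs, key=lambda d: len(q & words(d)), reverse=True)[:top_k]


def build_prompt(query: str, docs: List[str], shots: List[Tuple[str, str]]) -> str:
    """Retrieved context + a few worked examples (multishot) + the real question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    examples = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in shots)
    return ("Answer using only the context. If the answer is not there, say you don't know.\n\n"
            f"Context:\n{context}\n\n{examples}\n\nQ: {query}\nA:")


docs = [  # made-up knowledge base
    "The server room is on the third floor.",
    "Backups run every night at 2 am.",
    "The cafeteria closes at 3 pm.",
]
shots = [("Where is the server room?", "On the third floor.")]
print(build_prompt("When do backups run?", docs, shots))
```

The point is that the model answers from text you put in front of it, with a worked example of the expected answer format, instead of from whatever it half-remembers.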

But the models themselves fundamentally can’t write good, new code, even if they’re perfectly factual

These views on LLMs are simplistic. As a wise man once said, “check yoself befo yo wreck yoself”, so I recommend more education

LLM structures are overhyped, but they’re also not that simple

No the fuck it’s not

I’m a pretty big proponent of FOSS AI, but none of the models I’ve ever used are good enough to work without a human treating it like a tool to automate small tasks. In my workflow there is no difference between LLMs and fucking grep.

People who think AI codes well are shit at their job

We got baited by piece of shit journos

It’s a local model. It doesn’t send data over.

If they want call data, they can buy it straight from service providers anyway